Materials required:

- 1 robot minimum

- 1 computer per robot

- 1 individual arena minimum

Software configuration:

- example configuration: "Reinforcement learning - obstacle avoidance"

Duration:

30 minutes

Age:

Ages 8 and up

The advantages of this activity:

- Introducing reinforcement learning

- Understanding the "try and learn" principle and its pedagogical benefits

- Can be performed with the simulator

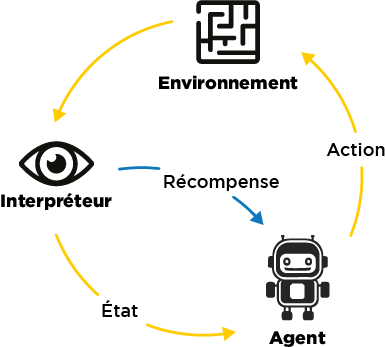

This activity involves observing a robot learning autonomously, based on the principle of reinforcement learning. Some general conclusions can be drawn about the conditions required for effective learning.


Introduction

In this activity, we don't train the robot ourselves: the robot generates its own examples and uses them to decide which actions to take.

To help the robot distinguish between good and bad decisions, the algorithm includes a reward system.

Here, the robot receives the following rewards for its actions:

- advance: 100 points

- turn: 82 points

- back off: -50 points

- The robot also receives a 50-point penalty (-50 points) when it gets blocked.

In the "Level" bar, you can see the average reward score obtained by the robot. Its aim is to maximize this score, so it will gradually learn to choose the actions that bring it the highest rewards, taking into account what its sensors are telling it.
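The reward system described above can be sketched in a few lines of Python. This is only an illustration: the point values come from the list above, but the function names are hypothetical, the sketch assumes that a blocked step scores the penalty instead of the action reward, and the software's "Level" bar may compute its average differently (for example over a sliding window).

```python
# Reward values taken from the activity text.
ACTION_REWARDS = {"advance": 100, "turn": 82, "back off": -50}
# Assumption: when the robot is blocked, the penalty replaces the action reward.
BLOCKED_PENALTY = -50


def reward(action: str, blocked: bool = False) -> int:
    """Return the points earned for one step."""
    if blocked:
        return BLOCKED_PENALTY
    return ACTION_REWARDS[action]


def average_reward(steps: list[tuple[str, bool]]) -> float:
    """Plain average of the rewards over a list of (action, blocked) steps."""
    if not steps:
        return 0.0
    return sum(reward(action, blocked) for action, blocked in steps) / len(steps)


# Four steps: the robot advances, hits a wall, turns, then advances again.
episode = [("advance", False), ("advance", True), ("turn", False), ("advance", False)]
print(average_reward(episode))  # (100 - 50 + 82 + 100) / 4 = 58.0
```

A robot that advances freely most of the time pushes this average towards 100, which is why maximizing the score leads it to avoid obstacles.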

We'll now be able to observe the robot's progress as it learns. Start by setting up an obstacle-free arena, and place the robot in it.

The robot learns on its own

In the AlphAI software, select the example configuration: "Reinforcement learning - obstacle avoidance".

In this activity, the robot teaches itself!

Start the autonomous mode, and watch your robot. How does it behave?

When the robot has identified a rewarding action (e.g. turning), it may decide to perform only that action from now on.

We'll now activate the "explore" button: from time to time, the robot will decide to perform a random action. This lets it discover that the action it would have chosen may not be the one that earns the best reward, and enriches its learning with new data.

Normally, it learns step by step: first to go straight ahead, then to turn around when it bumps into an obstacle, and finally to recognize obstacles in advance and turn before bumping into them! This learning process takes about 10 minutes.

Feel free to deactivate the "exploration" mode once the robot starts choosing the right actions.
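One common way to implement this kind of occasional random action is the "epsilon-greedy" rule. The sketch below is an assumption about the general technique, not a description of what the AlphAI software does internally; the function name, the estimate values and the 20% exploration rate are all hypothetical.

```python
import random


def choose_action(estimates: dict[str, float], epsilon: float, rng: random.Random) -> str:
    """With probability epsilon pick a random action, otherwise the best-looking one."""
    if rng.random() < epsilon:
        return rng.choice(list(estimates))
    return max(estimates, key=estimates.get)


# Hypothetical estimates after the robot has only ever tried turning:
# "turn" looks best simply because nothing else has been tested yet.
estimates = {"advance": 0.0, "turn": 82.0, "back off": 0.0}
rng = random.Random(0)  # fixed seed so the run is repeatable

# Without exploration (epsilon = 0) the robot repeats the same action forever.
greedy = {choose_action(estimates, 0.0, rng) for _ in range(200)}
# With exploration it occasionally tries the other actions too.
exploring = {choose_action(estimates, 0.2, rng) for _ in range(200)}

print(greedy)
print(exploring)
```

With exploration switched off, the set of chosen actions contains only "turn"; with it switched on, the robot gets a chance to try "advance" and find out that it pays more.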

Review and feedback

Once training is complete, stop your robot and click on "graphs" in the bottom bar (if the button doesn't appear, change the parameter display to "advanced" or "expert"). Normally, the plotted values should be very high at the start of the activity, then gradually tend towards zero: the robot has trained itself to stop making mistakes!
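To see why such a curve falls towards zero, here is a minimal sketch that assumes the plotted values are prediction errors, that is, the gap between the reward the robot expected and the reward it actually received. The update rule, the learning rate of 0.5 and the function name are illustrative assumptions, far simpler than what the software itself uses.

```python
def train(reward: float, steps: int, learning_rate: float = 0.5) -> list[float]:
    """Return the size of the prediction error recorded at each step."""
    estimate = 0.0  # the robot starts with no idea what the action is worth
    errors = []
    for _ in range(steps):
        error = reward - estimate
        errors.append(abs(error))
        # Move the estimate part of the way towards the observed reward.
        estimate += learning_rate * error
    return errors


errors = train(reward=100, steps=6)
print(errors)  # [100.0, 50.0, 25.0, 12.5, 6.25, 3.125]
```

Each repetition halves the error in this toy setting: large at first, then closer and closer to zero as the estimate catches up with the real reward.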

The way reinforcement learning works can be summarized as follows:

What's needed to teach the robot:

- Repetition of the same task: the robot doesn't immediately find the right solution, but only improves through repetition.

- Exploration: the robot may need to try out new ideas, to avoid being locked into a non-optimal solution.

- Rewards or penalties: the robot needs external help to assess whether its decisions are optimal or not.

- Room for error: the robot learns from its mistakes without getting discouraged! Mistakes help it learn which actions not to repeat!
