Materials required:

- 1 robot

- 1 computer

- 1 balloon (preferably green)

- Recommended: a gantry and string to hang the balloon high, and an arena

Advantages of this activity:

- Fun

- Popular with all ages

- Perfect for introducing the principle of reinforcement learning

Duration:

40 to 60 minutes

Age:

8 years and up

Software configuration:

Balloon tracking mode (green)

The aim is to use reinforcement learning to train the robot to follow a balloon. Working by trial and error, the robot gradually adjusts its behavior in response to feedback from its environment, which takes the form of rewards.

Introduction

Build your arena with a green balloon suspended in the center, serving as a visual cue for the robot. The robot must learn to follow it as it explores the space. Ensure good floor/wall contrast, even lighting and a clean floor to guarantee reliable detection, smooth mobility and optimal observation conditions.

Setup

Connect the robot to the computer and select the "ball tracking (green)" example configuration.

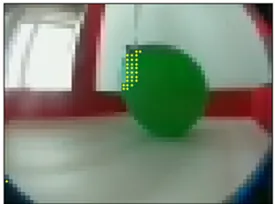

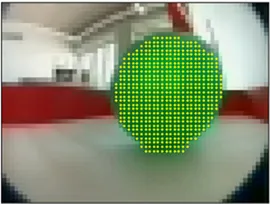

Check that, when the robot looks at the balloon, the balloon's green pixels are correctly identified: small yellow marks should appear on them in the image captured by the camera. No pixels elsewhere in the image should be marked as green.

If this is not the case, you will need to adjust the color detection parameters in the "Rewards" tab to obtain a satisfactory result.

Make sure that the balloon remains correctly detected, even when viewed from different angles.
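To give an idea of what "identifying green pixels" can mean, here is a minimal Python sketch. It is not the software's actual detector: the function names `green_mask` and `green_fraction` and the thresholds `min_green` and `margin` are assumptions chosen for illustration. A pixel counts as green when its green channel is bright enough and clearly stronger than the red and blue channels.

```python
import numpy as np

def green_mask(image, min_green=100, margin=40):
    """Return a boolean mask of the pixels treated as 'green'.

    image: H x W x 3 uint8 array in RGB order.
    min_green, margin: hypothetical thresholds, to be tuned by hand.
    """
    rgb = image.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (g >= min_green) & (g - r >= margin) & (g - b >= margin)

def green_fraction(image, **thresholds):
    """Fraction of the image covered by pixels treated as green."""
    return float(green_mask(image, **thresholds).mean())

# Synthetic test frame: grey background with a 20 x 20 green square.
frame = np.full((60, 80, 3), 120, dtype=np.uint8)
frame[20:40, 30:50] = (30, 200, 40)

mask = green_mask(frame)
print(int(mask.sum()))  # 400 pixels, all inside the square
```

Raising `margin` makes the detector stricter, which helps when other greenish objects are wrongly picked up. Lowering it helps when parts of the balloon are missed under uneven lighting.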

Training

Activate the "Stand-alone" button to start the learning phase.

The robot then enters the exploration phase, during which it adopts a trial-and-error strategy. It performs different actions at random to explore the possibilities open to it. This stage is crucial for the robot to discover for itself the consequences of its choices.

For each action performed, the robot receives feedback in the form of a positive, negative or zero reward. This mechanism encourages it to favor beneficial behaviors and avoid those that are inefficient or counter-productive. Gradually, it refines its strategy and improves its performance.
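The sketch below shows one way a positive, negative or zero reward could be derived from the camera image. The reward rules actually configured in the software are not described here, so everything in this example is an assumption: the function name `tracking_reward`, the parameters `min_pixels` and `centre_tolerance`, and the rule itself (penalty when the balloon is not visible, reward when it is roughly centred, zero otherwise).

```python
import numpy as np

def tracking_reward(mask, min_pixels=20, centre_tolerance=0.2):
    """Return -1.0, 0.0 or 1.0 from a boolean mask of green pixels.

    mask: H x W boolean array, True where a pixel was detected as green.
    min_pixels, centre_tolerance: hypothetical tuning parameters.
    """
    height, width = mask.shape
    ys, xs = np.nonzero(mask)
    if xs.size < min_pixels:
        return -1.0  # balloon not visible: negative reward
    # Horizontal distance between the balloon and the image centre,
    # expressed as a fraction of the image width.
    offset = abs(xs.mean() - (width - 1) / 2) / width
    return 1.0 if offset <= centre_tolerance else 0.0

centred = np.zeros((60, 80), dtype=bool)
centred[20:40, 30:50] = True   # balloon in the middle of the image

off_side = np.zeros((60, 80), dtype=bool)
off_side[20:40, 0:20] = True   # balloon near the left edge

empty = np.zeros((60, 80), dtype=bool)  # no balloon in view

print(tracking_reward(centred), tracking_reward(off_side), tracking_reward(empty))
# 1.0 0.0 -1.0
```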

You will see its actions change noticeably: random and irrelevant movements become rarer, while efficient behaviors are reinforced. The robot concentrates more and more on its objective, which is to follow the balloon.

Learning test

When the robot's behavior becomes consistent (i.e. it follows the balloon efficiently and regularly), deactivate the "Exploration" button.

At this stage, the robot only applies the actions it has learned to be most effective, without introducing random exploration. This ensures more stable, predictable and optimized operation, based on the experience gained during the exploration phase.
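The effect of switching exploration off can be sketched as follows. This is an illustration, not the software's code: the action names, the `choose_action` function and the `epsilon` parameter are hypothetical, and the strategy shown (epsilon-greedy) is a common choice that the software may or may not use. With exploration on, the robot occasionally picks a random action. With exploration off, it always picks the action with the highest learned value.

```python
import random

ACTIONS = ["forward", "left", "right"]  # hypothetical action set

def choose_action(q_values, exploration, epsilon=0.1, rng=random):
    """Pick an action from learned values.

    q_values: dict mapping each action to its estimated value.
    exploration: when True, act randomly with probability epsilon.
    """
    if exploration and rng.random() < epsilon:
        return rng.choice(ACTIONS)  # explore: try something at random
    return max(ACTIONS, key=lambda a: q_values[a])  # exploit: best known action

learned = {"forward": 0.8, "left": 0.1, "right": -0.3}

# Exploration off: the choice is always the same.
print(choose_action(learned, exploration=False))  # forward
```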

Conclusion

Here, we have used a reinforcement learning algorithm. Through a trial-and-error phase guided by a reward system, the robot gradually learns an effective visual tracking behavior.

At each step, the agent performs an action that changes the state of the environment. The agent must then decide whether to explore the environment further to discover new rewards, or to exploit its current knowledge to choose the most profitable actions in a given state. This behavior reflects the fundamental trade-off in reinforcement learning between exploration and exploitation.
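The loop described above (act, observe the new state, receive a reward, update what has been learned) can be illustrated with tabular Q-learning. The algorithm used by the software is not stated in this activity, so this is an assumption made for illustration. The state and action names, the function `q_update`, and the learning rate `alpha` and discount factor `gamma` are all hypothetical.

```python
from collections import defaultdict

ACTIONS = ["forward", "left", "right"]  # hypothetical action set

def q_update(q_table, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one Q-learning update and return the new value.

    Q(s, a) <- Q(s, a) + alpha * (reward + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q_table[next_state][a] for a in ACTIONS)
    target = reward + gamma * best_next
    q_table[state][action] += alpha * (target - q_table[state][action])
    return q_table[state][action]

# Every unseen state starts with a value of 0.0 for each action.
q_table = defaultdict(lambda: {a: 0.0 for a in ACTIONS})

# The balloon is on the left, the robot turns left and is rewarded.
first = q_update(q_table, "balloon_left", "left", 1.0, "balloon_centre")
second = q_update(q_table, "balloon_left", "left", 1.0, "balloon_centre")
print(first, second)  # 0.5 0.75
```

Each rewarded repetition moves the value of turning left in the "balloon_left" state closer to the reward, which is why that action is chosen more and more often once the robot exploits what it has learned.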

A successful learning strategy therefore implies a progressive reduction in unnecessary trials, as well as increasingly adapted and consistent autonomous behavior over time.
