
Equipment:

- AlphAI Robots

- 1 PC per robot

- Arena

Strengths:

- Global overview of robotics and AI

- Clarifies the different concepts (AI ≠ programming; supervised learning ≠ reinforcement learning)

Duration:

3 hours

Age:

From age 10

Configurations:

All four activities use configurations that are already included in the software.

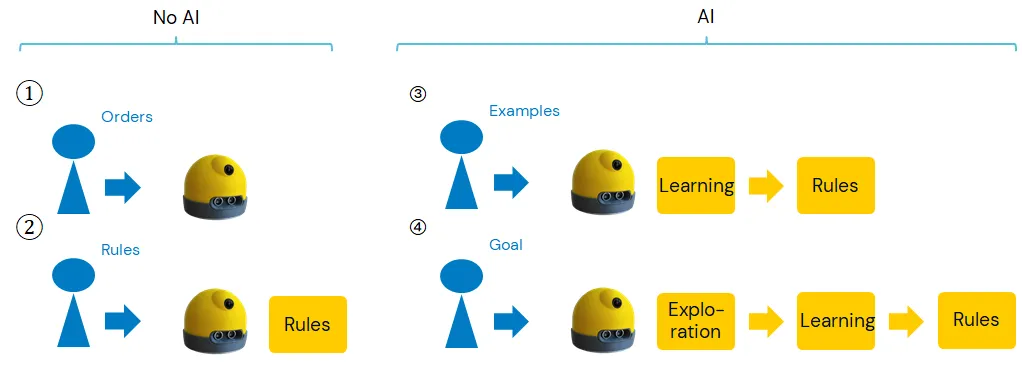

This educational sequence of four experiments shows the different ways of controlling a machine, from remote control (where the machine has no autonomy at all) to reinforcement learning (where the machine learns "on its own"). At every level, however, humans remain in control of the machine, because only they can set its goal.

The sequence also makes clear the difference between what is now called "Artificial Intelligence," namely systems that rely on machine learning (levels 3 and 4), and deterministic programming (level 2).

The accompanying slides illustrate each activity with short videos and a summary diagram.

These four steps are:

1. Remote control: the user loads the "remote control" configuration and then chooses the actions that the robot will perform. They also familiarize themselves with the software. The robot has no autonomy, as it is the human who chooses its actions at all times.

2. Programming: the user loads the "blocked versus movement" configuration and must then define the robot's decision rules according to whether its sensors detect that it is blocked or not (in practice, by selecting which connections to create in what turns out to be a mini artificial neural network). Once the program is set, the user presses the "Autonomous" button to test it. The "Reward" and "Level" displays show values that increase as the robot advances without getting stuck, so the activity can be presented as a game whose goal is to get the robot to the highest possible level (NB: the "level" is the average of the "rewards" obtained over one minute; this notion of reward comes back at level 4, "reinforcement learning"). Through this activity, the user has "programmed" the robot: it can then operate autonomously, but it always executes exactly what was programmed. The activity can be repeated with the other configurations in the "Manual Editing" category, which are progressively more difficult. It is also a good opportunity to stress that a robot needs sensors in order to be autonomous.

3. Supervised learning: users load the "robot race" configuration and train their robots to navigate a circuit (see the robot race activity for more details). The main difference from the previous "programming" level is that users no longer set the calculation rules for analyzing sensor data themselves; instead, they simply provide examples, from which the AI works out ("learns") on its own the rules it needs to apply.

4. Reinforcement learning: users load the "reinforcement learning - obstacle avoidance" configuration and simply press the "Autonomous" button. The robots start learning, no longer from examples provided by humans, but from their own experience, which they accumulate through their own "explorations," i.e., by trial and error. The reward is displayed (along with the level, which is an average of the reward): it is this reward that the AI tries to maximize. The reward is not part of the AI; it is computed by an auxiliary program that analyzes the robot's movements and blockages. Humans are therefore still in the loop, since they are the ones who chose how the reward is computed in order to train the AI to behave the way they want. More details on this level can be found in the obstacle avoidance activity.
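The decision rules set at the programming level (step 2) can be pictured as a tiny network whose connections are chosen by hand. The Python sketch below is only an illustration: the sensor states, action names, the `CONNECTIONS` table and the `decide` and `level` helpers are hypothetical and do not reproduce the AlphAI software's internals. It also computes the "level" as a plain average of the rewards collected over one minute, as described in step 2.

```python
# Hypothetical sensor states and actions; the real AlphAI software may differ.
SENSOR_STATES = ["blocked", "moving"]
ACTIONS = ["forward", "turn_left", "backward"]

# Connections chosen by the user (hypothetical): a weight of 1.0 links a
# sensor state to the action it should trigger. Missing pairs count as 0.
CONNECTIONS = {
    ("moving", "forward"): 1.0,
    ("blocked", "backward"): 1.0,
}


def decide(sensor_state: str) -> str:
    """Return the action whose output neuron receives the strongest input."""
    if sensor_state not in SENSOR_STATES:
        raise ValueError(f"unknown sensor state: {sensor_state}")
    activation = {a: CONNECTIONS.get((sensor_state, a), 0.0) for a in ACTIONS}
    # max() returns the first action with the highest activation.
    return max(ACTIONS, key=activation.get)


def level(rewards_last_minute: list[float]) -> float:
    """'Level' display: average of the rewards collected over the last minute."""
    if not rewards_last_minute:
        return 0.0
    return sum(rewards_last_minute) / len(rewards_last_minute)
```

For example, `decide("blocked")` returns `"backward"`, and `level([1.0, 1.0, -1.0, 1.0])` returns `0.5`. Nothing is learned here: the robot does exactly what the connections say, which is what separates this level from levels 3 and 4.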
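At the supervised learning level (step 3), the rules come from examples instead of being written by the user. As a stand-in for whatever learning algorithm AlphAI actually uses, the sketch below trains a small multiclass perceptron: a one-layer network whose connections are adjusted only when it mislabels an example. The two input features (track edge seen on the left or on the right), the three steering actions, and the example set are all hypothetical.

```python
# Hypothetical features: (edge seen on the left, edge seen on the right).
STEER_ACTIONS = ["forward", "turn_left", "turn_right"]

# Hypothetical examples a user might give while driving around the circuit.
EXAMPLES = [
    ((0, 0), "forward"),     # no edge in view: keep going
    ((1, 0), "turn_right"),  # edge on the left: steer right
    ((0, 1), "turn_left"),   # edge on the right: steer left
]


def features(x):
    """Append a constant 1 so that each action also learns a bias."""
    return (*x, 1)


def score(weights, action, x):
    """Weighted sum of the inputs reaching one action's output neuron."""
    return sum(w * xi for w, xi in zip(weights[action], features(x)))


def predict(weights, x):
    """Pick the action with the highest score (first one wins on ties)."""
    return max(STEER_ACTIONS, key=lambda a: score(weights, a, x))


def train_perceptron(examples, epochs=20):
    """Multiclass perceptron: adjust connections only after a wrong guess."""
    n = len(features(examples[0][0]))
    weights = {a: [0.0] * n for a in STEER_ACTIONS}
    for _ in range(epochs):
        mistakes = 0
        for x, label in examples:
            guess = predict(weights, x)
            if guess != label:
                mistakes += 1
                for i, xi in enumerate(features(x)):
                    weights[label][i] += xi  # strengthen the correct action
                    weights[guess][i] -= xi  # weaken the wrongly chosen one
        if mistakes == 0:  # every example is now classified correctly
            break
    return weights
```

After `weights = train_perceptron(EXAMPLES)`, `predict(weights, (1, 0))` returns `"turn_right"`: the user never wrote that rule, only supplied the example it was derived from.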
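For the reinforcement learning level (step 4), the sketch below illustrates the central point of the text: the learner only sees numbers produced by a separate, human-written `reward` function, and by trial and error it comes to prefer the actions that earn the most. It is a simplified epsilon-greedy action-value learner (a contextual bandit that looks only at the immediate reward, not full Q-learning), and the toy `step` environment, its 10% collision rate, and all state and action names are hypothetical rather than AlphAI's actual algorithm.

```python
import random

MOVES = ["forward", "turn", "backward"]
STATES = ["free", "blocked"]


def reward(moved_forward: bool, is_blocked: bool) -> float:
    """Human-written reward (hypothetical): not part of the AI, it only scores outcomes."""
    if is_blocked:
        return -1.0
    return 1.0 if moved_forward else 0.0


def step(state: str, action: str, rng: random.Random):
    """Toy stand-in for the robot in its arena (hypothetical dynamics).

    Returns (next_state, moved_forward, is_blocked).
    """
    if state == "blocked":
        if action == "backward":
            return "free", False, False  # backing up frees the robot
        return "blocked", False, True  # any other move stays stuck
    if action == "forward":
        hit_wall = rng.random() < 0.1  # driving forward sometimes hits a wall
        return ("blocked", False, True) if hit_wall else ("free", True, False)
    return "free", False, False  # turning or reversing in the open


def train_agent(steps: int = 2000, epsilon: float = 0.1, seed: int = 0):
    """Learn by trial and error which move earns the most reward in each state."""
    rng = random.Random(seed)
    value = {(s, a): 0.0 for s in STATES for a in MOVES}
    count = {(s, a): 0 for s in STATES for a in MOVES}
    state = "free"
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(MOVES)  # explore: try a random move
        else:
            action = max(MOVES, key=lambda a: value[(state, a)])  # exploit
        next_state, moved, blocked = step(state, action, rng)
        r = reward(moved, blocked)
        count[(state, action)] += 1
        # Incremental average of the rewards seen for this state-action pair.
        value[(state, action)] += (r - value[(state, action)]) / count[(state, action)]
        state = next_state
    # Greedy policy: the best-valued move in each state.
    return {s: max(MOVES, key=lambda a: value[(s, a)]) for s in STATES}
```

With these hypothetical dynamics, `train_agent()` settles on moving forward when free and backing up when blocked. In this sketch, editing `reward` is how a human changes what the robot ends up preferring; the learning code itself stays the same.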
